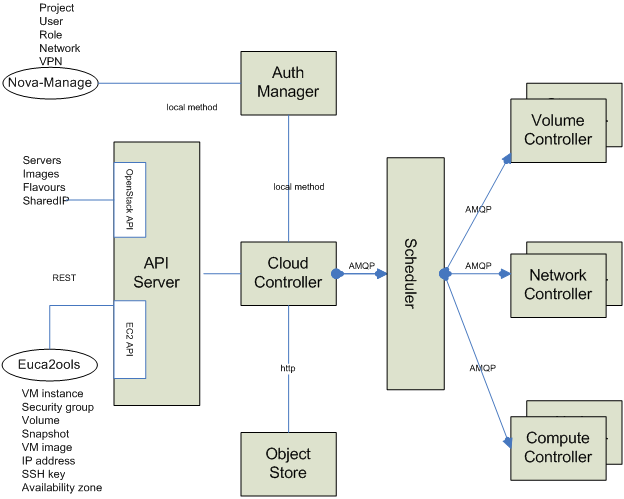

In an OpenStack system, the communication between the compute nodes and the scheduler goes through a messaging system such as RabbitMQ. While there have been different models over the years, the basic assumption has remained that all actors identify themselves to the broker via a password and are trusted from that point forward.

What would happen if a compute node was compromised? The service running on the node could put any message it wanted on the bus. Some of these messages are not ones that a compute node should ever send, such as “Migrate VM X to Node Y.” If the compromise was delivered via a VM, that hostile VM could then attempt to migrate itself to other nodes and compromise them, or could attempt to migrate other VMs to the compromised nodes and read their contents.

How could we mitigate attacks of this nature?

One way to defend against message-based attacks is to use the identity of the sender to confirm that it is authorized to send messages of that type. However, since a compromised node can write any data it wants into a message, we cannot rely on the content of the messages to establish identity.

Let’s say we require all messages to be signed. This would be comparable to the CMS-based PKI tokens I wrote, which have since been removed from Keystone. CMS is a PKI-based approach to document signing: the payload of a message is hashed, the hash is signed with a private key, and the recipient uses the associated public key to verify the message.
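To make the hash-then-sign flow concrete, here is a toy sketch using textbook RSA with two hard-coded Mersenne primes (2^61 - 1 and 2^89 - 1). This is not CMS: there is no padding, no certificate, and the key is far too small to be secure. It only shows the shape of the operation: hash the payload, apply the private key to the hash, and let the recipient check the result with the public key. The message format is made up for illustration.

```python
import hashlib

# Toy RSA key from two known Mersenne primes. INSECURE: illustration only.
P = 2**61 - 1
Q = 2**89 - 1
N = P * Q                            # public modulus
E = 65537                            # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))    # private exponent (needs Python 3.8+)


def digest_as_int(payload: bytes) -> int:
    """Hash the payload with SHA-256 and reduce it into the RSA group."""
    return int.from_bytes(hashlib.sha256(payload).digest(), "big") % N


def sign(payload: bytes) -> int:
    """Sender: apply the private exponent to the hash of the payload."""
    return pow(digest_as_int(payload), D, N)


def verify(payload: bytes, signature: int) -> bool:
    """Recipient: apply the public exponent and compare against a fresh hash."""
    return pow(signature, E, N) == digest_as_int(payload)


message = b'{"type": "migrate_vm", "vm": "X", "target": "node-Y"}'
tampered = message.replace(b"node-Y", b"node-Z")
sig = sign(message)
print(verify(message, sig))    # True
print(verify(tampered, sig))   # False
```

Real signatures, including the CMS tokens Keystone used to issue, add padding such as PKCS#1 v1.5 or PSS and use properly generated keys of 2048 bits or more; in practice you would use a vetted library rather than raw `pow`.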

While PKI stands for Public Key Infrastructure, which is built on asymmetric cryptography, in practice it means X509 certificates as the means of distributing the public keys, since they provide a way to establish a chain of trust. There has been a lot written about X509, here and elsewhere.

This approach suffers from the same key distribution problems as PKI tokens, not to mention the expense of the cryptographic operations on every message. PKI is not just the certificates themselves, but the totality of the infrastructure for requesting and signing certificates as well. While the cryptography story in Python is better than it was back then, we’d still like to avoid building all that additional infrastructure, especially since, with Transport Layer Security (TLS), the messages are already encrypted across the wire.

TLS uses PKI to set up an encrypted network connection. For the vast majority of TLS applications, only the server presents a certificate. However, the server can also authenticate the client using a client certificate. This is called Mutual TLS, or MTLS, and it is a more secure way for a client to identify itself than a password. We will have to handle distributing the client certificates, but we need to do that for the server certificates already, so we need some semblance of PKI anyway.
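On the server side, requiring client certificates is a small configuration change. Below is a minimal sketch using Python's standard `ssl` module; the function name and file paths are hypothetical, and a real message broker such as RabbitMQ has its own TLS settings rather than this code. The key line is `verify_mode = ssl.CERT_REQUIRED`, which makes the handshake fail for any client that cannot present a certificate chaining to the trusted CA.

```python
import ssl
from typing import Optional


def make_mtls_server_context(
    ca_file: Optional[str] = None,
    cert_file: Optional[str] = None,
    key_file: Optional[str] = None,
) -> ssl.SSLContext:
    """Build a server-side TLS context that rejects clients without a valid cert."""
    # PROTOCOL_TLS_SERVER starts with no trusted CAs, so only the CA we load
    # below can vouch for clients (unlike the system-wide trust store).
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # The "mutual" part: the client must present a certificate.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if cert_file and key_file:
        # The server's own identity, as in ordinary one-way TLS.
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if ca_file:
        # The (hypothetical) CA that issues the compute nodes' client certs.
        ctx.load_verify_locations(cafile=ca_file)
    return ctx
```

A server would then call `ctx.wrap_socket(sock, server_side=True)` on each accepted connection; after the handshake, `getpeercert()` on the wrapped socket returns the verified client certificate's fields, which is what the identity mapping works from.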

Keystone already has the ability to map an X509 certificate attribute to a user; it was one of the first protocols supported in the Identity Federation effort. Thus, if the service has a “user” record in Keystone, the X509 client certificate used to set up the MTLS connection can be used to identify that user. We can even assign roles to these users. Every compute node would be assigned a user with something like the “compute” role at the service scope.
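The mapping step can be sketched in a few lines. Keystone does this with federation mapping rules evaluated against certificate attributes exposed by the web server; the code below only mimics the idea in-process. It reads the `commonName` from the subject in the format Python's `getpeercert()` returns, and looks it up in a hypothetical table standing in for Keystone's user records and role assignments. The host names, user table, and role names are assumptions for illustration.

```python
from typing import Dict, List, Optional, Tuple

# Shape of getpeercert()["subject"]: a tuple of RDNs, each RDN a tuple of
# (attribute, value) pairs.
Subject = Tuple[Tuple[Tuple[str, str], ...], ...]

# Hypothetical stand-in for Keystone service users and their role assignments,
# keyed by the certificate commonName.
SERVICE_USER_ROLES: Dict[str, List[str]] = {
    "compute-01.example.com": ["compute_node"],
    "scheduler.example.com": ["compute_scheduler"],
}


def common_name(subject: Subject) -> Optional[str]:
    """Pull the commonName out of a peer certificate subject."""
    for rdn in subject:
        for attr, value in rdn:
            if attr == "commonName":
                return value
    return None


def roles_for_peer(peer_cert: dict) -> List[str]:
    """Map a verified client certificate to the roles of its service user."""
    cn = common_name(peer_cert.get("subject", ()))
    if cn is None:
        return []
    # An unknown certificate gets no roles rather than a default role.
    return list(SERVICE_USER_ROLES.get(cn, []))


peer = {"subject": ((("organizationName", "Example Cloud"),),
                    (("commonName", "compute-01.example.com"),))}
print(roles_for_peer(peer))  # ['compute_node']
```

Note that the identity comes from the certificate the TLS layer already verified, not from anything the node wrote into a message.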

It is probably worth pointing out that service:all is a lousy way to scope these privileges. It would be better to limit the scope to the Nova service, or better yet, to the endpoint. Even scoping them to the region would be an improvement. If regions were projects, we could scope to regions. Anything that can contain other things should be a project. Keystone has too many disjoint abstractions. Everything in Keystone should be a project, and live in a unified hierarchy.
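Here is a sketch of what a unified hierarchy would buy. If regions, services, and endpoints were all projects, a single walk up the tree would decide whether a grant covers a target, and a role granted at the Nova service in one region would not leak into another region. The project names, the `PARENT` table, and `has_role` are hypothetical; this is not Keystone's API or data model.

```python
from typing import Dict, Iterator, Optional, Set, Tuple

# Hypothetical unified hierarchy: every container is a project.
PARENT: Dict[str, Optional[str]] = {
    "cloud": None,
    "region-one": "cloud",
    "region-one/nova": "region-one",
    "region-one/nova/endpoint-a": "region-one/nova",
    "region-two": "cloud",
    "region-two/nova": "region-two",
}

# Role assignments as (user, role, project) triples.
ASSIGNMENTS: Set[Tuple[str, str, str]] = {
    ("compute-01", "compute_node", "region-one/nova"),
}


def ancestors(project: str) -> Iterator[str]:
    """Yield the project itself and every project above it."""
    node: Optional[str] = project
    while node is not None:
        yield node
        node = PARENT[node]


def has_role(user: str, role: str, target: str) -> bool:
    """A role granted on a project applies to everything beneath it."""
    return any((user, role, p) in ASSIGNMENTS for p in ancestors(target))


print(has_role("compute-01", "compute_node", "region-one/nova/endpoint-a"))  # True
print(has_role("compute-01", "compute_node", "region-two/nova"))             # False
```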

If messages carried a “type” field, the policy rules could match a role to a message type. Users with the role “compute_scheduler” would be able to send messages of type “migrate VM,” but users with the role “compute_node” would not.
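A minimal sketch of such a rule check follows. The policy table, the `may_send` function, and the message type names are assumptions for illustration, not oslo.policy or oslo.messaging APIs. The important design point is that the sender's roles are passed in from the authenticated connection, never read from a field inside the message, and that unknown message types are denied by default.

```python
from typing import Dict, FrozenSet, Iterable

# Hypothetical policy: which roles may send which message types.
MESSAGE_POLICY: Dict[str, FrozenSet[str]] = {
    "migrate_vm": frozenset({"compute_scheduler"}),
    "report_state": frozenset({"compute_node", "compute_scheduler"}),
}


def may_send(sender_roles: Iterable[str], message: dict) -> bool:
    """Allow a message only if the sender holds a role permitted for its type.

    sender_roles must come from the authenticated connection (for example the
    MTLS client certificate), never from the message body.
    """
    allowed = MESSAGE_POLICY.get(message.get("type"), frozenset())
    # Unknown or missing types map to an empty set, so they are denied.
    return any(role in allowed for role in sender_roles)


migrate = {"type": "migrate_vm", "vm": "X", "target": "node-Y"}
print(may_send(["compute_scheduler"], migrate))  # True
print(may_send(["compute_node"], migrate))       # False
```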

I briefly looked into doing this kind of check with RabbitMQ, and it seems like it would work. QPid had GSSAPI and Kerberos support, which would be ideal, but I think it used X509 for TLS, not GSSAPI-based encryption, so there would still be the need for PKI. FreeIPA easily supports both, but it would be nice to get things down to a single type of cryptography. However, since QPid support was pulled from OpenStack, it was a non-starter.

Looking to the future, Pulsar and Kafka both seem to support TLS and multiple types of encryption. Message-level policy enforcement could be built right into the platform. This would be useful for a much wider set of applications beyond just OpenStack services.